A few months ago, I raised a simple customer support ticket.

The chatbot replied in seconds, with all the confidence in the world, but none of the help I needed.

It proudly deflected the query.

I walked away frustrated, yet marked as “resolved” in their dashboard.

That’s when we realized something was broken, not in the tech, but in how we measure success.

Most support systems optimize for deflection: the share of queries that never reach a human.

What if we flipped that metric?

What if we measured resolution happiness instead? That means measuring how satisfied someone feels after the issue is actually solved.

At MATH (AI & ML Tech Hub at T-Hub), we ran an experiment:

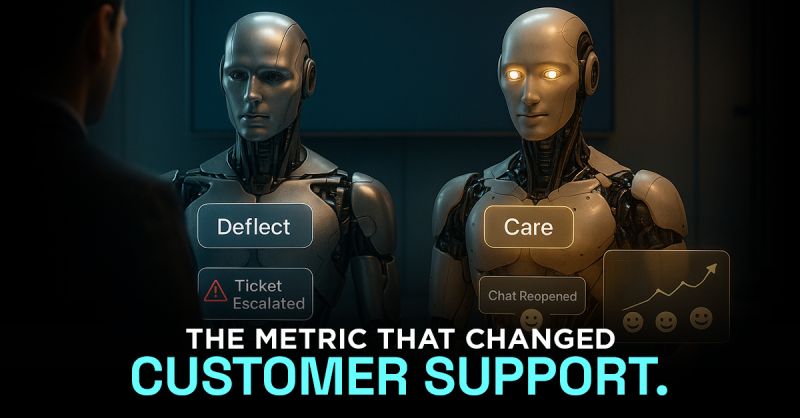

→ Take two models.

→ Train one to deflect.

→ Train the other to maximize resolution happiness (using follow-up sentiment data and reopen rates).
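To make the difference between the two targets concrete, here is a minimal sketch of how each could be scored on the same set of tickets. It is not the code from our experiment: the `Ticket` fields, the sentiment scale of -1 to 1, and the `reopen_penalty` parameter are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Ticket:
    # All fields are hypothetical, chosen only for this illustration.
    reached_human: bool        # False means the bot "deflected" the query
    followup_sentiment: float  # assumed follow-up sentiment score in [-1.0, 1.0]
    reopened: bool             # True if the customer reopened the ticket


def deflection_rate(tickets):
    """Share of tickets that never reached a human."""
    if not tickets:
        raise ValueError("need at least one ticket")
    return sum(not t.reached_human for t in tickets) / len(tickets)


def resolution_happiness(tickets, reopen_penalty=1.0):
    """Average per-ticket happiness in [0, 1].

    Sentiment is rescaled from [-1, 1] to [0, 1]; a reopened ticket
    loses `reopen_penalty` (floored at 0), since a reopen suggests
    the issue was not actually solved.
    """
    if not tickets:
        raise ValueError("need at least one ticket")
    total = 0.0
    for t in tickets:
        score = (t.followup_sentiment + 1.0) / 2.0
        if t.reopened:
            score = max(0.0, score - reopen_penalty)
        total += score
    return total / len(tickets)


# Made-up sample data: three of four tickets were deflected,
# but two of those were reopened by unhappy customers.
tickets = [
    Ticket(reached_human=False, followup_sentiment=-0.8, reopened=True),
    Ticket(reached_human=False, followup_sentiment=0.6, reopened=False),
    Ticket(reached_human=True, followup_sentiment=0.9, reopened=False),
    Ticket(reached_human=False, followup_sentiment=-0.5, reopened=True),
]

print(f"deflection rate:      {deflection_rate(tickets):.2f}")
print(f"resolution happiness: {resolution_happiness(tickets):.2f}")
```

On this made-up sample, the deflection rate looks healthy while the happiness score does not, which is exactly the gap between "resolved" in a dashboard and resolved for the customer.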

The second model took longer to train.

But guess what?

→ Overall CSAT went up.

→ Escalations went down.

→ And customers actually trusted the AI more.

Sometimes, the problem isn’t that AI doesn’t understand.

It’s that we’ve been rewarding it for the wrong outcome.