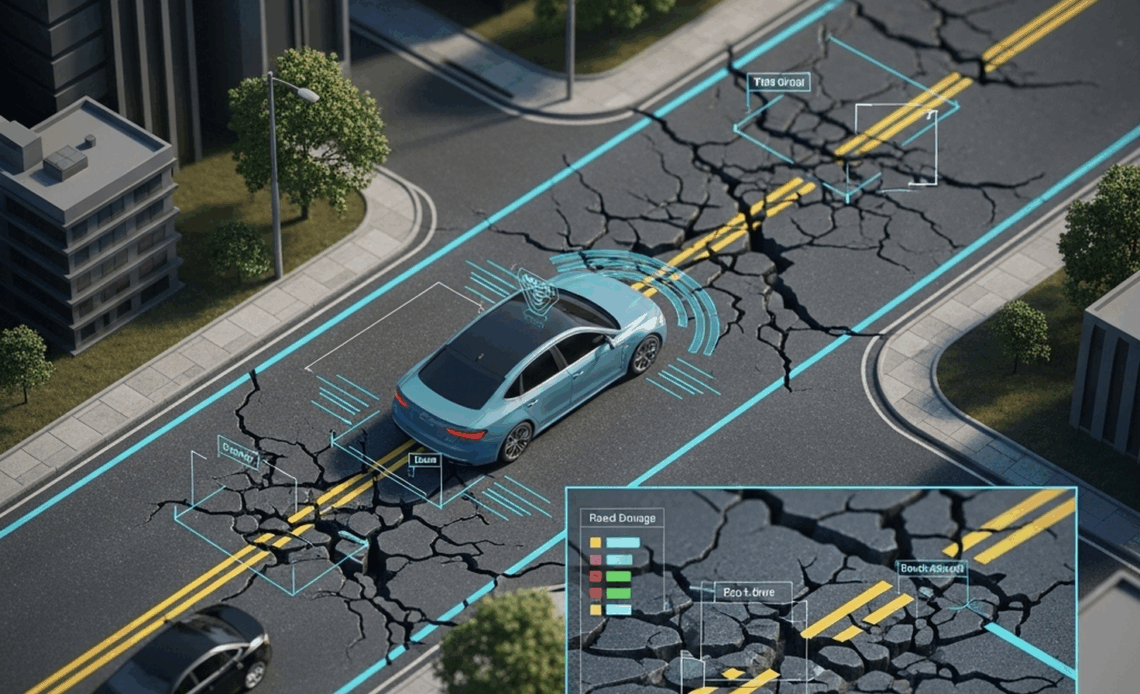

In the race toward full autonomy, it’s not just pedestrians, vehicles, and traffic signals that autonomous vehicles (AVs) need to recognize—they must also understand the road itself.

Potholes, surface cracks, scattered debris, broken signage, and faded lane markings aren’t just minor inconveniences—they’re real safety hazards. Ignoring them puts passengers, other drivers, and the AV’s navigation logic at risk.

That’s why visual learning in AV systems must go beyond object detection. It must include a semantic understanding of road condition, and that understanding depends on training with richly annotated visual and sensor data that captures the nuance of real-world conditions.

🔍 Key Areas Where Annotation Transforms AV Road Intelligence:

🕳️ Pothole Identification & Classification: Not all potholes are equal. Teaching AVs to recognize severity levels helps determine route safety and vehicle response.

⚠️ Detection of Cracks, Debris, and Surface Wear: Minute cracks may indicate structural stress. Debris could prompt lane shifts or braking.

🛑 Recognition of Damaged or Missing Signage: When signs are bent, obscured, or missing, the vehicle must rely on contextual cues to infer traffic rules.

🚫 Faded, Confusing, or Improper Lane Markings: Misleading road paint can cause dangerous misalignment in navigation.

🌉 Monitoring of Structural Integrity: Bridges, barriers, and guardrails should be continuously monitored for signs of degradation or impact damage.
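To make the idea of "richly annotated" data concrete, here is a minimal sketch of what one label record for the categories above might look like. Everything in it is an assumption for illustration: the `DefectType` and `Severity` enums, the `RoadAnnotation` fields, the three-level severity scale, and the pixel bounding-box convention are hypothetical, not an established annotation standard.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Iterable, Optional, Tuple


class DefectType(Enum):
    # Hypothetical label set mirroring the categories listed above.
    POTHOLE = "pothole"
    CRACK = "crack"
    DEBRIS = "debris"
    DAMAGED_SIGN = "damaged_sign"
    FADED_LANE_MARKING = "faded_lane_marking"
    STRUCTURAL_DAMAGE = "structural_damage"


class Severity(Enum):
    # Assumed three-level scale; real projects may use finer grades.
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class RoadAnnotation:
    image_id: str
    defect: DefectType
    severity: Severity
    # Assumed convention: (x_min, y_min, x_max, y_max) in pixels.
    bbox: Tuple[float, float, float, float]

    def __post_init__(self) -> None:
        x_min, y_min, x_max, y_max = self.bbox
        if x_max <= x_min or y_max <= y_min:
            raise ValueError("bbox must have positive width and height")


def worst_severity(annotations: Iterable[RoadAnnotation]) -> Optional[Severity]:
    """Return the highest severity among the annotations, or None if there are none."""
    return max(
        (a.severity for a in annotations),
        key=lambda s: s.value,
        default=None,
    )
```

Recording severity as its own field, rather than folding it into the class name, lets the same label feed both a detector (where is the pothole?) and a downstream risk estimate (how bad is it?).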

With meticulously labeled visual and sensory inputs, deep learning systems can be trained to:

✅ Adjust speed or reroute dynamically based on road quality

✅ Trigger maintenance alerts to fleet operators

✅ Feed real-time road health insights to municipal authorities
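The three outcomes above can be sketched as a small decision function that turns model detections into a driving response plus a report. This is a toy illustration under stated assumptions, not how any production AV stack works: the `Detection` fields, the 1 to 3 severity scale, the confidence cutoff, and the speed multipliers are all hypothetical values chosen for readability.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Detection:
    label: str         # e.g. "pothole", "debris" (hypothetical label names)
    severity: int      # assumed scale: 1 (minor) to 3 (severe)
    confidence: float  # model score in [0, 1]


def plan_response(
    detections: List[Detection],
    current_speed_kph: float,
    min_confidence: float = 0.5,  # assumed cutoff for trusting a detection
) -> Dict[str, object]:
    """Map road-condition detections to a driving action and a report."""
    # Ignore low-confidence detections so noise does not trigger a reroute.
    trusted = [d for d in detections if d.confidence >= min_confidence]
    worst = max((d.severity for d in trusted), default=0)

    if worst >= 3:
        action, target = "reroute", current_speed_kph * 0.5
    elif worst == 2:
        action, target = "slow_down", current_speed_kph * 0.8
    else:
        action, target = "continue", current_speed_kph

    return {
        "action": action,
        "target_speed_kph": target,
        # Moderate or severe defects are flagged for fleet operators.
        "maintenance_alert": worst >= 2,
        # Labels that could be forwarded to a road-health feed.
        "report": [d.label for d in trusted],
    }
```

For example, calling `plan_response([Detection("pothole", 3, 0.9)], 60.0)` would select the reroute branch and raise a maintenance alert, while a frame with only low-confidence detections leaves speed unchanged.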

In short, we’re no longer just teaching AVs to see—we’re teaching them to understand the story the road is telling.

As autonomy scales, this capacity to interpret the traffic environment together with the physical health of the road itself will become a defining factor for both safety and efficiency.

Are we giving enough attention to these “less visible” but deeply impactful elements of the driving environment? It’s time to rethink what visibility means in the age of intelligent mobility.