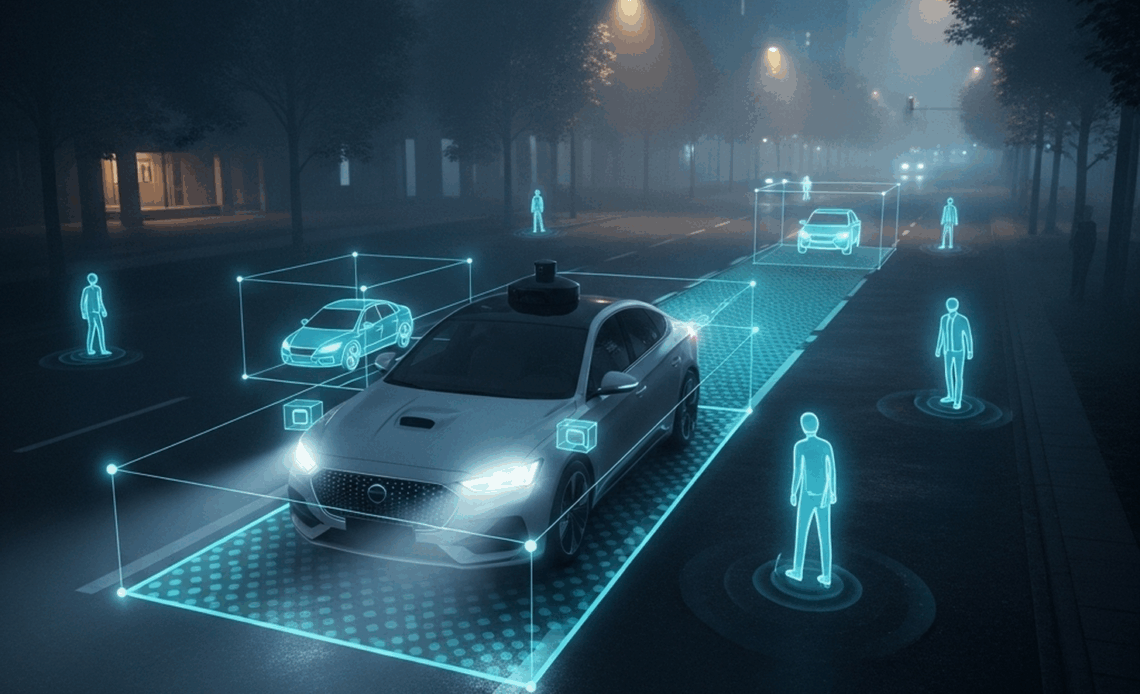

Nighttime presents one of the toughest challenges for autonomous vehicles. Low-light environments, harsh glare from oncoming headlights, faded lane markings, and sudden pedestrian movement can easily confuse even the most advanced AI models.

Much of the solution lies in smarter, more precise data annotation.

At Annotation World, we specialize in labeling these complex, high-risk scenarios to teach models how to “see” in the dark—enhancing both safety and reliability.

✨ What We Annotate for Night Driving:

✅ Bounding Boxes for detecting low-contrast pedestrians, cyclists, and vehicles.

✅ Semantic Segmentation of roads, sidewalks, signs, and surroundings under minimal lighting.

✅ Lane Detection in the toughest conditions—rainy nights, foggy highways, and poorly lit intersections.

✅ Object Tracking across video frames, compensating for lens flares, shadows, and reflective surfaces.
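To make the bounding-box and tracking items above more concrete, here is a minimal Python sketch of how per-frame boxes could be stored and checked for gaps in a track. The schema is purely illustrative: `BoxAnnotation`, its fields, the tag names, and the helpers `group_tracks` and `missing_frames` are assumptions made for this example, not a description of any particular tool or of our internal format.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class BoxAnnotation:
    """One bounding box in one video frame (hypothetical schema)."""
    frame: int        # frame index within the clip
    track_id: int     # same ID = same physical object across frames
    label: str        # e.g. "pedestrian", "cyclist", "vehicle"
    x: float          # top-left corner, in pixels
    y: float
    width: float
    height: float
    tags: tuple = ()  # illustrative night-scene attributes


def group_tracks(annotations):
    """Group boxes by track_id, each track sorted by frame index."""
    tracks = defaultdict(list)
    for ann in annotations:
        tracks[ann.track_id].append(ann)
    return {tid: sorted(boxes, key=lambda a: a.frame)
            for tid, boxes in tracks.items()}


def missing_frames(track):
    """Return frame indices between a track's first and last box that have no box."""
    frames = [a.frame for a in track]
    present = set(frames)
    return [f for f in range(frames[0], frames[-1] + 1) if f not in present]


# Made-up example data: a pedestrian track with a gap and a vehicle track without one.
annotations = [
    BoxAnnotation(2, 7, "pedestrian", 412, 230, 38, 96, ("low_contrast",)),
    BoxAnnotation(0, 7, "pedestrian", 400, 228, 36, 95, ("low_contrast",)),
    BoxAnnotation(3, 7, "pedestrian", 418, 231, 38, 97, ("low_contrast", "headlight_glare")),
    BoxAnnotation(0, 9, "vehicle", 120, 300, 210, 140, ("wet_road_reflection",)),
    BoxAnnotation(1, 9, "vehicle", 126, 301, 212, 141, ("wet_road_reflection",)),
]

tracks = group_tracks(annotations)
for track_id, track in tracks.items():
    print(track_id, track[0].label, "missing frames:", missing_frames(track))
```

A gap like the one in track 7 is the kind of spot where glare or shadow may have hidden an object for a frame, so a reviewer would want to look at it before the data reaches training.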

By annotating edge-case night scenes—dim alleys, wet roads, construction zones—we help build models that don’t just survive the night but thrive in it.

The result? AV systems that are safer, smarter, and more reliable—around the clock.

Annotation World is your partner in annotating high-complexity night driving datasets—so your AV model never drives blind.