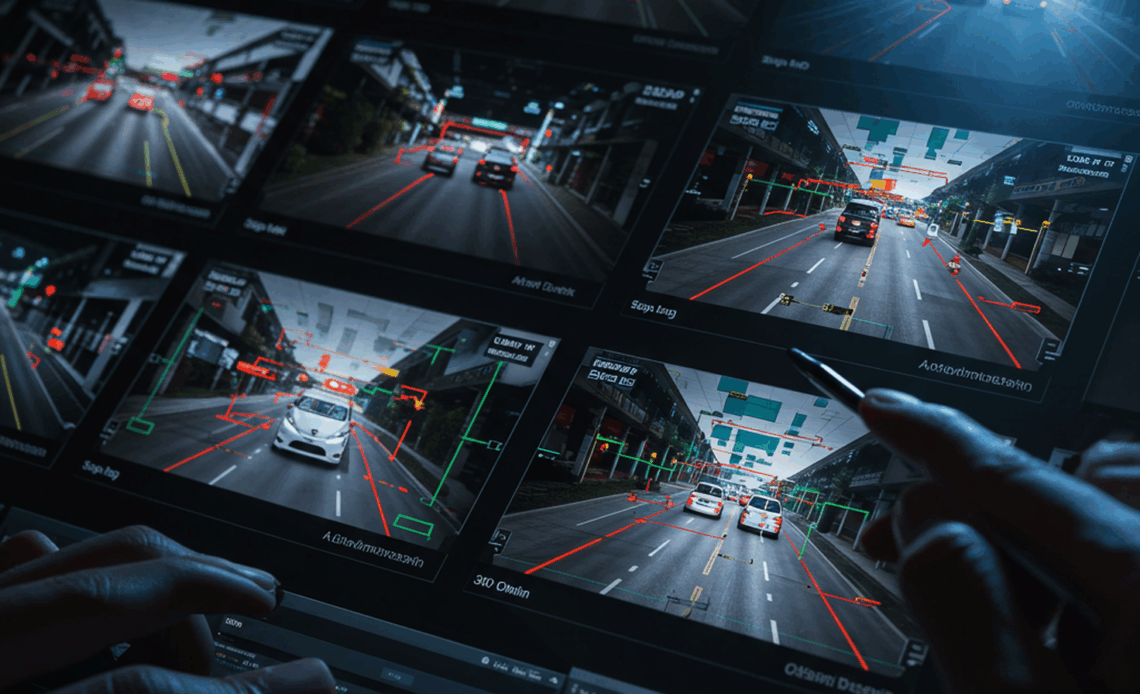

In the rapidly advancing world of autonomous vehicles, one of the most critical factors for safe and efficient navigation is the ability to perceive and interpret the surrounding environment accurately. This is where video annotation, the labeling of objects and events in recorded driving footage, plays a transformative role.

✅ Why It Matters:

To ensure that autonomous systems can reliably identify pedestrians, vehicles, traffic signs, and other objects, precise and consistent annotation of video data is essential. It serves as the foundation for training and improving machine learning models that power perception and decision-making in self-driving technology.

✅ How Video Annotation Supports AV Vision:

🔹 Frame-by-Frame Annotation

- Video footage is split into individual still frames, often thousands of them per recording.

- Each frame is annotated with detailed information, such as object class, location (for example, a bounding box around each object), and behavior.

- This enables the system to learn how to detect and respond to real-world elements with high accuracy.
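To make per-frame labeling more concrete, here is a minimal Python sketch of what one frame's annotation record might look like, plus the simple arithmetic behind why frame counts climb into the thousands. The schema (`BoundingBox`, `ObjectLabel`, `FrameAnnotation`, and the example `behavior` strings) is hypothetical and for illustration only; real annotation tools and datasets each define their own formats.

```python
from dataclasses import dataclass, field, asdict
import json

# Hypothetical label schema for illustration only; real annotation tools
# and datasets define their own fields and export formats.


@dataclass
class BoundingBox:
    # Pixel coordinates of the top-left and bottom-right corners.
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def is_valid(self, width: int, height: int) -> bool:
        """Check that the box has positive area and lies inside the image."""
        return (0 <= self.x_min < self.x_max <= width
                and 0 <= self.y_min < self.y_max <= height)


@dataclass
class ObjectLabel:
    object_class: str          # e.g. "pedestrian", "vehicle", "traffic_sign"
    box: BoundingBox           # where the object appears in this frame
    behavior: str = "unknown"  # e.g. "crossing", "stopped", "turning_left"


@dataclass
class FrameAnnotation:
    frame_index: int
    objects: list[ObjectLabel] = field(default_factory=list)


def frame_count(duration_s: float, fps: float) -> int:
    """Number of still frames a clip yields at a given frame rate."""
    return int(duration_s * fps)


def to_json(frames: list[FrameAnnotation]) -> str:
    """Serialize annotations (nested dataclasses included) to JSON."""
    return json.dumps([asdict(f) for f in frames], indent=2)


if __name__ == "__main__":
    # A one-minute clip at 30 fps already yields 1,800 frames to label.
    print(frame_count(60, 30))  # 1800

    frame = FrameAnnotation(
        frame_index=0,
        objects=[
            ObjectLabel("pedestrian", BoundingBox(100, 200, 140, 320), "crossing"),
            ObjectLabel("traffic_sign", BoundingBox(600, 80, 640, 120)),
        ],
    )
    # Reject boxes that fall outside a 1280x720 image before export.
    assert all(o.box.is_valid(1280, 720) for o in frame.objects)
    print(to_json([frame]))
```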

🔹 Live-Stream Frame Annotation

- Once individual frames are annotated, tracking algorithms carry those labels forward, following and interpreting each object across subsequent frames.

- Human annotators only need to step in when the system encounters uncertainties or anomalies, which cuts manual effort and improves overall efficiency.

- This dynamic tracking allows vehicles to understand motion, predict object paths, and make informed navigation decisions in real time.
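The tracking-assisted workflow described above can be sketched in a few lines of Python. The post doesn't specify which tracking algorithm is used, so this swaps in a deliberately simple one: a human-labeled box from frame 0 is matched to each later frame's detector proposals by intersection-over-union (IoU), and any frame where no proposal overlaps enough is flagged for human review, with a constant-velocity guess filling the gap as a rough path prediction. The function names, the 0.5 IoU threshold, and the detector proposals are all assumptions; production pipelines typically rely on far more robust trackers.

```python
# Hypothetical sketch of tracking-assisted annotation: names, threshold,
# and the detector proposals are illustrative assumptions.

Box = tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes (0.0 = no overlap)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def predict_next(track: list[Box]) -> Box:
    """Constant-velocity guess: shift the last box by the last frame-to-frame motion."""
    if len(track) < 2:
        return track[-1]
    a, b = track[-2], track[-1]
    return tuple(2 * bv - av for av, bv in zip(a, b))


def propagate(seed: Box, proposals: list[list[Box]], min_iou: float = 0.5):
    """Carry a human-labeled box (frame 0) forward through later frames.

    proposals[i] holds the boxes a detector proposed for frame i + 1.
    Returns the tracked boxes and the frame indices that need human review.
    """
    track = [seed]
    needs_review = []
    for frame, candidates in enumerate(proposals, start=1):
        prev = track[-1]
        best = max(candidates, key=lambda c: iou(prev, c), default=None)
        if best is not None and iou(prev, best) >= min_iou:
            track.append(best)  # confident match: accept automatically
        else:
            needs_review.append(frame)  # uncertain: queue for an annotator
            track.append(predict_next(track))  # placeholder until corrected
    return track, needs_review


if __name__ == "__main__":
    seed = (100, 100, 140, 180)  # pedestrian labeled by hand in frame 0
    proposals = [
        [(104, 100, 144, 180), (400, 50, 440, 90)],  # frame 1
        [(108, 100, 148, 180)],                      # frame 2
        [(300, 300, 340, 380)],                      # frame 3: pedestrian missed
    ]
    track, review = propagate(seed, proposals)
    print(review)     # [3]
    print(track[-1])  # (112, 100, 152, 180)
```

Only frame 3 lands in the review queue; frames 1 and 2 are accepted automatically because their proposals overlap the previous box well. That mirrors the idea that people intervene only when the system is unsure.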

✅ The Road Ahead:

As autonomous driving technology continues to evolve, the quality of video annotation will play a decisive role in its advancement. From urban intersections to highways, accurate object recognition and behavior prediction are vital for creating safe and reliable autonomous systems.

What’s your take on the future of video annotation in self-driving vehicles? Let us know in the comments!